Organizations now collect more data than a single machine can store or process. Extracting meaningful insights from it requires a framework that spreads both storage and computation across many machines. Hadoop, an open-source software framework, has become a cornerstone of big data processing.

Understanding Hadoop: A Deep Dive

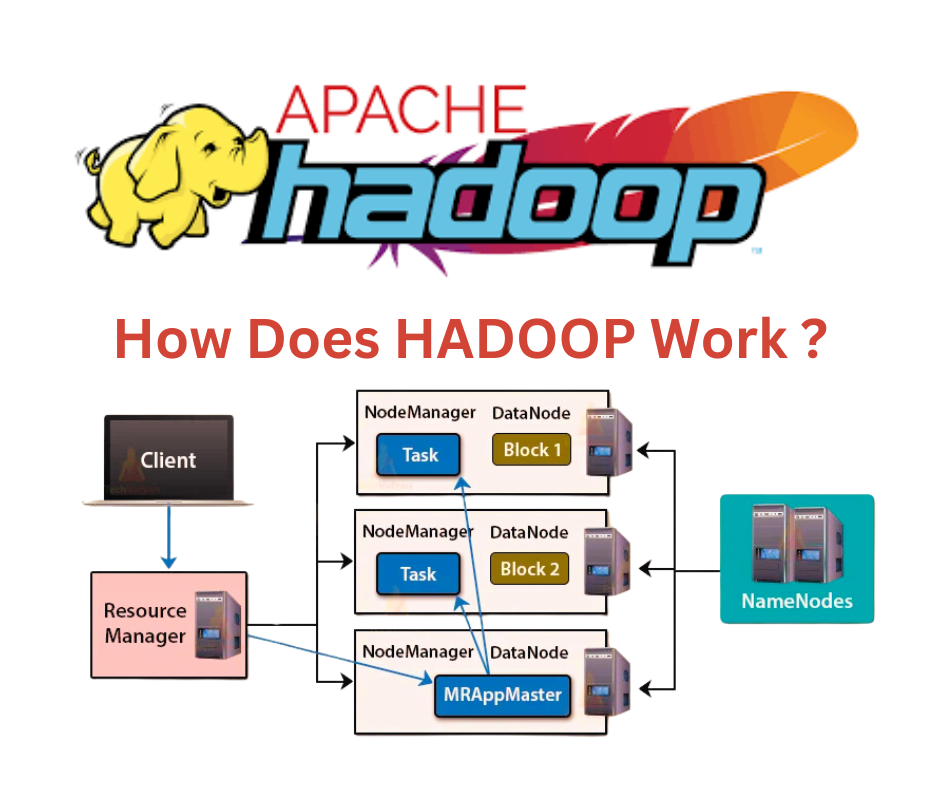

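Hadoop is a distributed computing framework designed to store and process massive amounts of data across clusters of computers. Its two best-known core components are listed below; since Hadoop 2, a third component, YARN, handles cluster resource management and job scheduling.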

- Hadoop Distributed File System (HDFS): A distributed file system that splits files into large blocks and replicates each block across multiple nodes in a cluster (three copies by default), providing fault tolerance and scalability.

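- MapReduce: A programming model for processing large datasets in parallel across clusters of computers. A map phase turns input records into key-value pairs, and a reduce phase aggregates the values collected for each key.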
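
To make the MapReduce model more concrete, here is a minimal single-machine sketch of the classic word-count example in plain Python. It does not use Hadoop's API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. On a real cluster, the framework would run many map and reduce tasks in parallel on different nodes and perform the grouping step itself.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence on a list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data nodes"]))
```

Because each line is mapped independently and each key is reduced independently, both phases can be split across machines, which is what lets the model scale.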

Why Hadoop is a Boon for Big Data

The advent of big data has presented unprecedented challenges and opportunities for businesses. Hadoop addresses these challenges head-on by offering:

- Scalability: Scales horizontally by distributing storage and processing across multiple nodes, so capacity grows as nodes are added to the cluster.

- Cost-Effectiveness: Runs on clusters of commodity hardware rather than specialized systems, which keeps the cost of storing and processing large volumes of data down.

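- Fault Tolerance: Ensures data integrity and availability through redundancy. HDFS keeps multiple copies of each data block on different nodes, and failed tasks are rerun elsewhere in the cluster.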

- Flexibility: Supports a wide range of data formats and processing tasks.

- Open Source: Provides a free and customizable platform for development.
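
The trade-off between fault tolerance and storage cost can be estimated with simple arithmetic. The sketch below assumes a 128 MB block size and a replication factor of 3, which are common HDFS defaults but are configurable per cluster; the helper name `hdfs_footprint` is hypothetical and is not part of any Hadoop API.

```python
import math


def hdfs_footprint(file_size_mb, block_size_mb=128, replication=3):
    """Estimate how a file is laid out under block-based replication.

    Returns (blocks, block_replicas, raw_storage_mb). The defaults are
    assumptions for illustration, not values read from a cluster.
    """
    # A file is split into fixed-size blocks; the last one may be partial.
    blocks = math.ceil(file_size_mb / block_size_mb)
    # Every block is stored `replication` times on different nodes.
    block_replicas = blocks * replication
    # A partial last block only occupies its actual size, so raw storage
    # is simply the file size multiplied by the replication factor.
    raw_storage_mb = file_size_mb * replication
    return blocks, block_replicas, raw_storage_mb


if __name__ == "__main__":
    print(hdfs_footprint(1000))
```

Under these assumptions, a 1000 MB file is split into 8 blocks, stored as 24 block replicas, and occupies 3000 MB of raw disk space. Losing a single node therefore leaves at least two copies of every block available.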

Real-World Applications of Hadoop

Hadoop has found applications across various industries, including:

- Retail: Analyzing customer purchasing behavior, inventory management, and fraud detection.

- Finance: Processing financial transactions, risk assessment, and fraud prevention.

- Healthcare: Analyzing medical records, drug discovery, and population health.

- Telecommunications: Network optimization, customer churn analysis, and fraud detection.

- Government: Data analysis for public policy, census data processing, and national security.

By leveraging Hadoop, organizations can gain a competitive edge by extracting valuable insights from their data and making data-driven decisions.

The Future of Hadoop

While Hadoop has been a pioneer in big data processing, the landscape continues to evolve. Integrating Hadoop with machine learning, artificial intelligence, and cloud computing is opening up new possibilities for how clusters are deployed and what they are used for.

In conclusion, Hadoop remains a powerful tool for handling big data challenges. Its ability to scale across clusters, process vast datasets in parallel, and do so cost-effectively makes it a valuable asset for organizations seeking to harness the power of their data.
